How do Hyperglance Automations work?

Setup

During deployment of Hyperglance's automation software, a number of cloud-based resources are deployed:

For AWS:

- S3 Bucket

- Lambda Function

- Role under which the automations run

- Policies attached to the role and S3 bucket

These are configured according to the principle of least privilege. The Lambda function is configured to use the S3 bucket as a trigger.

For Azure:

- Storage Account

- Function App, with an App Service Plan

How are Automations Triggered?

Within Hyperglance, automation actions may be triggered in two ways:

1. Manually on one or more resources

This is done from the Advanced Search page using the Run Action button.

2. Configured to run as part of a rule

When the rule runs, Hyperglance executes the automated action. The automation is attached while creating or editing a rule, using the Add Automation button.

What happens after an Automation is triggered?

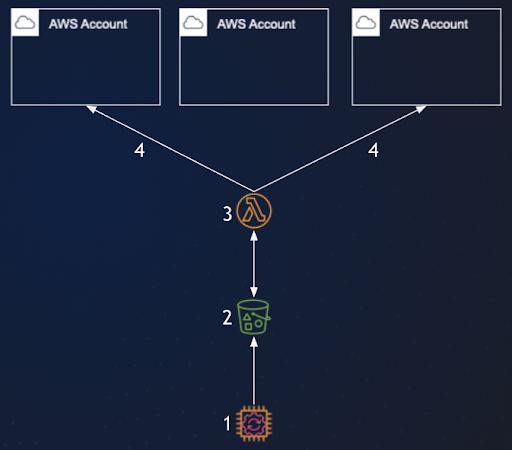

Once triggered, Hyperglance will generate an event JSON file containing all the information required to run the automation.

1. This JSON file is uploaded as an object to the AWS S3 bucket, or as a blob to the Azure Storage Account.

2. The storage service fires an event to signal the addition of a new file.

3. For AWS this event triggers the Lambda function. For Azure it triggers the Function App.

4. The triggered function (written in Python) reads the JSON file and performs the requested actions against the target resources.

The function connects to other AWS accounts (using AssumeRole) or Azure subscriptions as necessary.
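On the AWS side, steps 3 and 4 can be sketched roughly as follows. This is a minimal illustration, not Hyperglance's actual function code: the function names are hypothetical, and the contents of the event JSON file are not assumed here, so the sketch simply returns the parsed payloads. The only structure relied on is the standard S3 notification event that AWS passes to Lambda.

```python
import json
from urllib.parse import unquote_plus


def extract_s3_objects(event):
    """Return (bucket, key) pairs for each record in an S3 notification event."""
    objects = []
    for record in event.get("Records", []):
        s3_info = record["s3"]
        bucket = s3_info["bucket"]["name"]
        # S3 URL-encodes object keys in event notifications.
        key = unquote_plus(s3_info["object"]["key"])
        objects.append((bucket, key))
    return objects


def load_automation_event(s3_client, bucket, key):
    """Download and parse the event JSON file uploaded to the bucket."""
    response = s3_client.get_object(Bucket=bucket, Key=key)
    return json.loads(response["Body"].read())


def lambda_handler(event, context, s3_client=None):
    """Hypothetical entry point: load every event JSON file named in the trigger."""
    if s3_client is None:
        import boto3  # provided by the AWS Lambda Python runtime

        s3_client = boto3.client("s3")
    payloads = []
    for bucket, key in extract_s3_objects(event):
        payloads.append(load_automation_event(s3_client, bucket, key))
    # A real function would now run the requested actions for each payload.
    return payloads
```

Passing the S3 client in as a parameter is only a convenience for local testing; in Lambda the default client is created from the function's role.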

How Does Cross-Account Usage Work in AWS?

For resources outside the account in which the Lambda function resides, a role must be configured in the target account that only the Lambda function from the Lambda account is allowed to assume.
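As an illustration, the trust policy on such a role generally takes the shape below. This is a sketch under assumptions: the helper name and the ARN are hypothetical placeholders, and the policy Hyperglance actually deploys may contain additional conditions.

```python
import json


def build_trust_policy(lambda_role_arn):
    """Build a trust policy that lets only the given role assume the target role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # Only this principal (the Lambda's execution role) is trusted.
                "Principal": {"AWS": lambda_role_arn},
                "Action": "sts:AssumeRole",
            }
        ],
    }


# Hypothetical ARN, for illustration only.
policy = build_trust_policy(
    "arn:aws:iam::111111111111:role/ExampleAutomationsLambdaRole"
)
print(json.dumps(policy, indent=2))
```

Naming a single role ARN as the principal, rather than the whole account, is what restricts access to the Lambda function alone.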

The actions can then operate on cross-account resources as if they were local to the account in which the Lambda function runs.
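A minimal sketch of how a function might obtain credentials for another account through AssumeRole is shown below. The helper name, role name, and session name are hypothetical, and the ARN format assumes the standard `aws` partition; this is not taken from Hyperglance's code.

```python
def assume_role_credentials(sts_client, account_id, role_name,
                            session_name="automation-session"):
    """Assume a role in another account and return boto3-style credential kwargs."""
    # Assumes the standard "aws" partition; other partitions use a different prefix.
    role_arn = f"arn:aws:iam::{account_id}:role/{role_name}"
    response = sts_client.assume_role(
        RoleArn=role_arn,
        RoleSessionName=session_name,
    )
    creds = response["Credentials"]
    # These keys match the keyword arguments accepted by boto3.client().
    return {
        "aws_access_key_id": creds["AccessKeyId"],
        "aws_secret_access_key": creds["SecretAccessKey"],
        "aws_session_token": creds["SessionToken"],
    }
```

The returned dictionary can be passed straight to a client constructor, for example `boto3.client("ec2", **assume_role_credentials(boto3.client("sts"), "222222222222", "ExampleTargetRole"))`, after which calls on that client act on resources in the target account.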

How Does Cross-Subscription Usage Work in Azure?

The managed identity for the Function App is granted access to every subscription you listed in `subscriptions.csv` when deploying the Terraform stack. The IAM permissions granted to it therefore allow it to manage resources in those subscriptions only.